I’ve been teaching “Agile Projects” (yes, the title does feel a bit strange) to undergraduate students for a few years, and I’ve always took that opportunity to spend half a day on TDD. That meant me demo’ing a test-first implementation of FizzBuzz, then letting the students take a stab at the Fibonacci series. Yes, all very run-of-the-mill stuff. Understandably, I was always worried that concepts would not be really understood.

This year, I decided to try out Cyber Dojo, a website by Jon Jagger that is essentially a bare-bone IDE, streamlined for TDD. For this, I baited the students by offering bonus exam points for those who submitted the exercise. Also, the exercise was to be done on their own time.

Cyber Dojo is not particularly designed for running exams, and it was lacking around the edges, but it does seem to do the job.

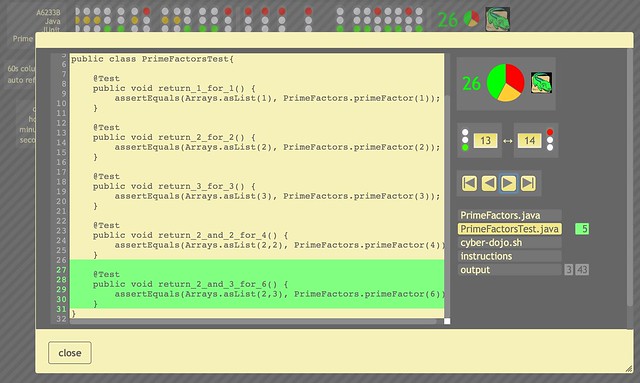

The way it works is by letting the student selects a pre-packaged environment (Java+JUnit, say, or PHP+PHPUnit, or even Erlang+eunit). They would also chose a particular kata from a list of 20 or so, made of well-known exercises. The environment is set up with a simple code file, a test file, and an instruction file. There is also a file containing the output of the test execution and a script for the adventurous who wants to tune how the tests are run. That’s it. No refactoring tools, no syntax highlighting, no reformatting. Just you and the code.

All the student can do, after modifying code, is run the tests. The results are recorded in the output file, and a traffic light icon is displayed in red, yellow or green. It is up to the student to decide when the kata has been completed.

The plan has been to point them to the site, and ask them to email me the identifier for their exercise: the “dojo id” and “animal name”. It is possible for several students to share a dojo, but I figured that encouraging them to start a new dojo each would reduce the risk of cheating by copy/paste.

Upon receiving the dojo details, I would review the sequence of red/green lights (an ideal series would be a repeating sequence of red/green/green, representing writing a test that fails/writing code that passes the test/refactoring), then read the code modified at each step. This would let me see whether the code was truly designed using baby steps — and would let me easily weed out those who copy & pasted the solution from the internet.

Finally, I would then write up my thoughts and email them back to the student. Those who did the exercise early had a chance to do it again, with the benefit of my comments.

One drawback of reviewing TDD exercises, is the time it takes to review the results. It takes me almost 20 mins to read, assign scores, and send comments to the students. With 80 students, that would in theory take more than 24 hours of work. I mitigated this by making the exercise optional and also allowing students to work in pairs. I still got a good 30 to review. What I saw was not very flattering to me as a teacher… but that would be a topic for another post.